How to delete a large number of rows in production

I have been googling about this problem for quite some time now. Finally decided to write how I solved it. Here’s the whole story.

At Commenda, we process thousands of transactions every day. One of the recent projects that I’ve been working on was US Sales tax. It’s a normal ETL flow. The inputs are invoices coming from the user’s accounting software or through CSV files. The source here doesn’t matter. What matters is how quickly we can process this data.

I know you’re probably wondering that this seems like an easy task and I’m whining about nothing. But let me tell you the first problem with this flow. (Fun task: Try to think about all the problems that having thousands of invoices will cause).

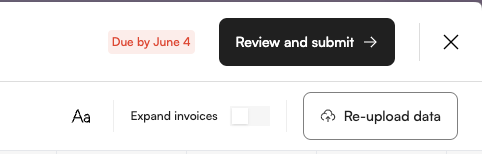

We allow users to reupload their CSV file or change the invoice input source. The UI looks like this

When the user clicks on “re-upload data”. All the invoices that were previously processed should be removed. Then we need to process the data from the new source. Did you notice the line “should be removed”?

That means we will need to delete thousands of invoices and then move to successive processing. Now things start to get interesting.

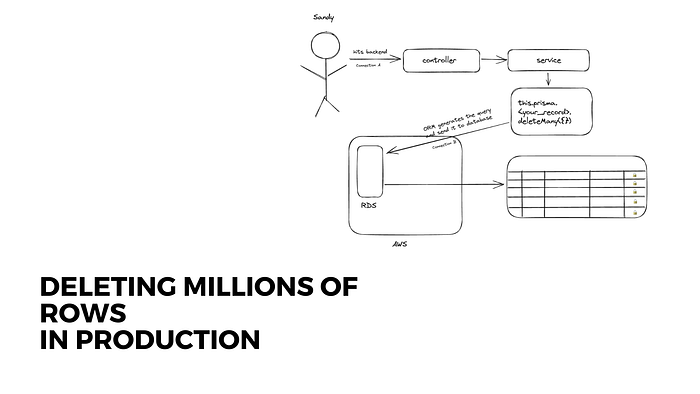

Before we continue, here’s the information about our tech stack: We use PostgreSQL as our database and Prisma for ORM.

Why are certain rows easier to delete than others?

Not all rows take the same amount of time to get deleted. The most straightforward answer is the more connected a record is, the longer it will take to delete. Here’s an explanation

- Foreign relations: If the record is connected to multiple foreign records, it will take longer to delete.

- Cascades: If there are dependent cascades on the record, it will take longer to delete for obvious reasons.

What are the other factors that affect the rate of deletion?

The most obvious factor is the computational resource. We use AWS RDS at Commenda. The instances are configured uniquely to production and staging environments. So the same database processes take different times for different environments as well.

Note: All the benchmarking in this blog is done on my Macbook 14 M2 Pro. So the results will be different on your machine.

On a RDS configured like the above, the results should be almost similar.

The physical location of the database also matters. If the database and server are deployed in the same instance the processing will be faster. Why? Because the time consumed in network hopping will be low. (What? If the server and database are not in the same location, then your request will need to first travel from your server to the database, get processed, and then a bunch of data will need to travel all the way back to your server). How to keep your server and database in the same location? Simply deploy them in the same region, or even better create a VPC.

When should I do this optimization? Do I even need to read this blog?

Good question. Do you know what’s worse than optimization? Premature optimization. If the number of invoices is <~5K you probably don’t need this.

It took around 41725ms (41 seconds) to delete 6.4K invoices with deleteMany. But keep in mind. The invoice model in Commenda is connected to multiple other records like line_items, customers, addresses, etc. So it’s heavily connected.

What are the different ways to delete records?

I thought you would never ask. You, my friend, are in on a treat. There are 3 ways to delete records w.r.t Prisma and in general as well.

- deleteMany (This is your SQL’s DELETE * from TABLE WHERE CLAUSE)

- Paginated deleted (This is the same as above but paginated using skip and take)

- soft delete (This is using a boolean/1 bit column and marking it as true, instead of actually deleting the row)

DeleteMany: This is the simple old-fashioned this.prisma.<your_model>.deleteMany. One thing to note is that a large number of rows will lock your database. If you call deleteMany with a where clause that results in the deletion of 70K rows, they won’t be deleted instantly and the records will be locked. There are 2 ways you would be using deleteMany.

- In a foreground task (when the code is in the path of a controller. A normal API call.)

- In a background task (A cron job, schedules, etc).

No matter what Sandy from the business team tells you, for large number of records don’t ever put a deleteMany in a foreground task. Your 4th grade professor didn’t, so I will explain why time-consuming database queries are bad.

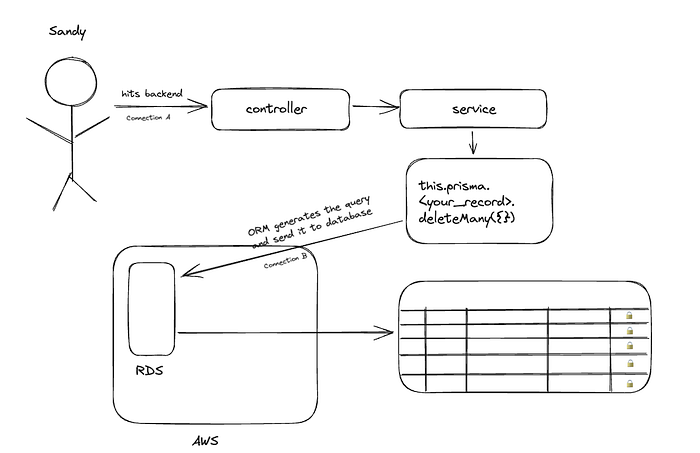

I’ve labeled 2 network calls as Connection A and Connection B. Connection A : Sandy hits the controller. Connection B: Service hits the ORM/RDS

When your database starts, Prisma by default creates a connection pool. 1 connection pool has multiple connections to your database. Why have multiple connections? Each request to the database is one connection. Though Node.js is single threaded still due to its concurrent nature there could be multiple database requests getting processed at the same time. Hence we need more than one connection. Also if you start a connection every time a request is encountered, your response time will be very slow. Why? Because your backend will have to do a network handshake and open a TCP connection with the database for each database request, that’s slow. So the easiest solution is to park some extra open connections beforehand.

So Prisma makes sure that “Connection B” doesn’t break no matter how long the database takes to process the request. Does it mean Connection B can never break or fail? That’s not true. What if you merge something to your prod branch and now a redeployment is happening? The server will die with a SIGKILL. Or if the server is an API server, there will be continuous north-to-south traffic flow, and something else might break that stops your server. In all these cases Connection B will break.

If you have a very time-consuming database process, you will lose the tracking of it and you will be stuck in a very weird state. Also, this can happen if you have written a cron job. A cron job specially handles a large chunk of data and is more prone to failing due to the above reasons.

Once my CTO showed me a piece of code and asked when would it fail, and I said,

"Why would it fail?" He replied, "I don't know, why does anything fail?"

Whenever you are deciding why something would fail - a function call, a loop,

a network call—just remember Murphy's Law.Also, let’s say that connection B only takes 12 seconds. 12 seconds for to and fro your database and service, including the processing time inside the database. The connection A still is only open for 10 seconds. A normal HTTP call will timeout after 10 seconds. Means Sandy will still see a gateway timeout or request timeout error, and the user will now be in a weird state, not being sure whether the process was successful or not.

Hence proved, any database query that can be longer than 8–9 seconds should be handled very carefully.

Paginated Delete: Talk less and show me the code version —

async batchDeleteMarkedForDeletionRows() {

if (this.isBatchDeleteInProgress) {

console.error("An instance of batch delete is already in progress");

return;

}

let deletedCount = 0;

this.isBatchDeleteInProgress = true;

do {

const invoices = await this.prisma.invoices.findMany({

where: {

...insert your where clause

},

take: this.BATCH_DELETE_PAGE_SIZE,

orderBy: {

createdAt: "asc", // This prevents starvation

},

select: { id: true },

});

try {

if (invoices.length > 0) {

const ids = invoices.map(invoice => invoice.id);

const result = await this.prisma.invoices.deleteMany({

where: {

id: { in: ids },

},

});

deletedCount = result.count;

} else {

deletedCount = 0;

}

} catch (err) {

console.error(err);

deletedCount = 0;

}

} while (deletedCount > 0);

this.isBatchDeleteInProgress = false;

}This is how you do a paginated delete in Prisma.

Soft Delete: Soft delete means creating a new bit column in your table, named isDeleted (or whatever you prefer), and then every time any service wants to delete a row, they simply update the column to true instead of actually deleting it. Then a cron job will delete them when the server load time will be lowest. (For example, if your company has an Indian customer base, this could be Indian nighttime). Next time when you read from that model, you make sure you put a where : {isDeleted:false}.

Note: When implementing soft delete, you will have to make that model’s reads and writes modular. Other services shouldn’t be allowed to directly read the model from the database. You can’t just expect all the developers in your team to remember to write isDeleted:false every time they do a read. A good pattern to enforce this is using DAOs. I personally love DAOs but they are hard to implement with Prisma.

How to choose between deleteMany, paginated deletes and soft deletes?

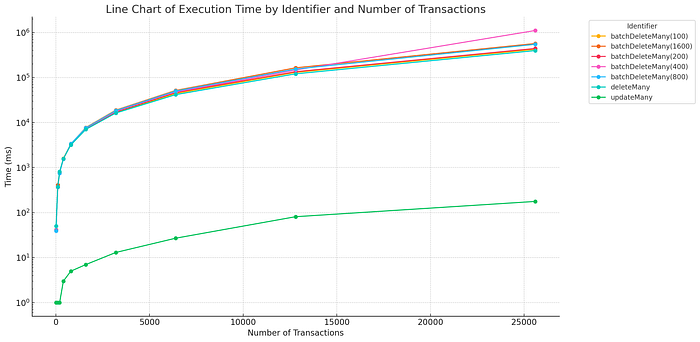

It’s benchMarkOClock. I wrote a script to actually test this and benchmark the results.

What does the script do? It creates N invoices, then deletes them with a method, stores how long it took, and repeats for all methods, and exponentially grows N.

The methods we have

- deleteMany

- updateMany (updateMany is soft delete)

- paginated delete with 100-page size

- paginated delete with 200-page size

- paginated delete with 400-page size

- paginated delete with 800-page size

- and for my fellow geometric progression lovers paginated delete with 1600-page size

If you are an addict, please place your bets already.

Here’s the script if you want to test it yourself.

async function testBatchDeleteMarkedForDeletionRows(pageSize: number) {

if (this.isBatchDeleteInProgress) {

console.error("An instance of batch delete is already in progress");

return;

}

let deletedCount = 0;

this.isBatchDeleteInProgress = true;

do {

const invoices = await this.prisma.invoices.findMany({

take: pageSize,

orderBy: {

createdAt: "asc", // This prevents starvation

},

select: { id: true },

});

try {

if (invoices.length > 0) {

const ids = invoices.map(invoice => invoice.id);

const result = await this.prisma.invoices.deleteMany({

where: {

id: { in: ids },

},

});

deletedCount = result.count;

} else {

deletedCount = 0;

}

} catch (err) {

console.error(err);

deletedCount = 0;

}

} while (deletedCount > 0);

this.isBatchDeleteInProgress = false;

}

function createOneRandomInvoice(): Prisma.PrismaPromise<any> {

return this.prisma.invoice.create({

data : { "this is trade secret. I do not want to be fired." }

});

}

async function createNRandomInvoices(n: number) {

const data = Array.from(new Array(n)).map(_ => this.createOneRandomInvoice());

const batches = batchArray(data, 100);

console.log("started creating transactions");

for (const batch of batches) {

await this.prisma.$transaction(batch);

}

console.log("finished creating transactions");

}

async function test_deletion(numberOfInvoices: number) {

// 1. create N invoices

// 2. check how long does it take to do an updateMany

// 3. Check how long does it take to delete them all at once with deleteMany

// 4. Check how long does it take to delete them using paginated deletion

const { performance: perf } = performance;

// Delete everything in database before the test starts

await this.invoiceService.testBatchDeleteMarkedForDeletionRows(100);

await this.createNRandomInvoices(numberOfInvoices);

const timeA1 = perf.now();

await this.invoiceService.markInvoicesForDeletion({});

const timeA2 = perf.now();

logSalesTaxMessage(`updateMany_${numberOfInvoices}`, (timeA2 - timeA1).toString());

// because the command above doesn't actually delete the transactions and simply marks them for deletion

// we need a script to actually delete them before proceeding to the next stages of testing.

await this.invoiceService.testBatchDeleteMarkedForDeletionRows(100);

await this.createNRandomInvoices(numberOfInvoices);

const timeB1 = perf.now();

const a = await this.prisma.invoices.deleteMany({});

const timeB2 = perf.now();

console.log("deleted with deleteMany", a);

logSalesTaxMessage(`deleteMany_${numberOfInvoices}`, (timeB2 - timeB1).toString());

await this.createNRandomInvoices(numberOfInvoices);

const timeC1 = perf.now();

await this.invoiceService.testBatchDeleteMarkedForDeletionRows(100);

const timeC2 = perf.now();

logSalesTaxMessage(`batchDeleteMany(100)_${numberOfInvoices}`, (timeC2 - timeC1).toString());

await this.createNRandomInvoices(numberOfInvoices);

const timeD1 = perf.now();

await this.invoiceService.testBatchDeleteMarkedForDeletionRows(200);

const timeD2 = perf.now();

logSalesTaxMessage(`batchDeleteMany(200)_${numberOfInvoices}`, (timeD2 - timeD1).toString());

await this.createNRandomInvoices(numberOfInvoices);

const timeE1 = perf.now();

await this.invoiceService.testBatchDeleteMarkedForDeletionRows(400);

const timeE2 = perf.now();

logSalesTaxMessage(`batchDeleteMany(400)_${numberOfInvoices}`, (timeE2 - timeE1).toString());

await this.createNRandomInvoices(numberOfInvoices);

const timeF1 = perf.now();

await this.invoiceService.testBatchDeleteMarkedForDeletionRows(800);

const timeF2 = perf.now();

logSalesTaxMessage(`batchDeleteMany(800)_${numberOfInvoices}`, (timeF2 - timeF1).toString());

await this.createNRandomInvoices(numberOfInvoices);

const timeG1 = perf.now();

await this.invoiceService.testBatchDeleteMarkedForDeletionRows(1600);

const timeG2 = perf.now();

logSalesTaxMessage(`batchDeleteMany(1600)_${numberOfInvoices}`, (timeG2 - timeG1).toString());

}

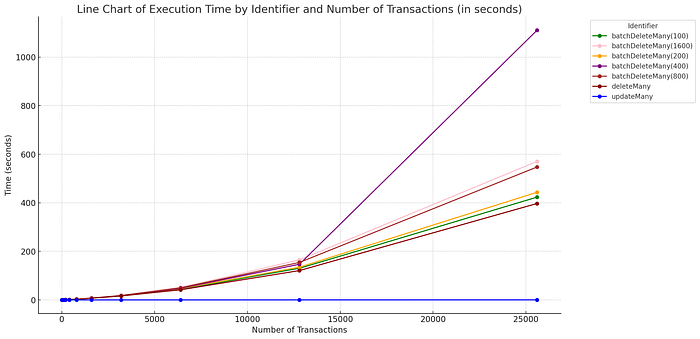

There is a 4-magnitude difference between soft deleting and actually deleting records.

But the above charts weren’t doing the justice to soft delete. So here’s one more

Do you see how flat that line is? I love it. Ultimately we ended up adopting soft delete for this. I have no idea what’s going on with paginated delete (400).

For perspective, it took around 44 minutes to delete 80K transactions with

a deleteMany and 0.624 seconds to do soft delete.So why don’t people just do soft deletes all the time?

They come with their own DX problem. You need to centralize all the reads, you can’t make your code un-modular, you need to be very careful that everyone follows the pattern of reading through the DAO, and you need to schedule jobs and make sure you don’t bloat your database. The scheduled job shouldn’t burden your database during regular hours.

Parting words

I gave you a fun task at the beginning of this blog. The task was to find out all the other problems that come with a large number of invoices/row.

It turns out those problems deserve their own blog. One such problem was, all the invoices were connected to a Company, when the admin tried to delete the Company, it timed out. Later we realized that because there were 0.1 million invoices attached to the company, the call wasn’t getting processed in time.

This was a fun blog to write. I recently worked on creating a robust queue system where even 1 packet loss would mean ****. Maybe I’ll write about it next.